SurgPose - a new dVRK dataset

Great news! "SurgPose: a Dataset for Articulated Robotic Surgical Tool Pose Estimation and Tracking" was just published by Zijian Wu, Adam Schmidt, Randy Moore, Haoying Zhou, Alexandre Banks, Peter Kazanzides, and Septimiu E. Salcudean, a mixed group from the University of British Columbia, Intuitive Surgical, Worcester Polytechnic Institute, and Johns Hopkins University.

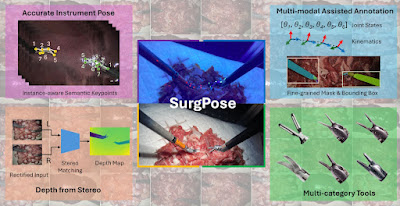

Above: "Overview diagram of the proposed SurgPose dataset. Authors collected 30 trajectories with diverse annotations using a da Vinci IS1200 system with the da Vinci Research Kit (dVRK), with ex vivo tissue background. We mark keypoints on 6 classes of surgical tools using ultraviolet (UV) reactive paint. Each trajectory is acquired under both UV and white light to extract keypoint annotations."

Abstract

Accurate and efficient surgical robotic tool pose estimation is of fundamental significance to downstream applications such as augmented reality (AR) in surgical training and learning-based autonomous manipulation. While significant advancements have been made in pose estimation for humans and animals, it is still a challenge in surgical robotics due to the scarcity of published data. The relatively large absolute error of the da Vinci end effector kinematics and arduous calibration procedure make calibrated kinematics data collection expensive. Driven by this limitation, we collected a dataset, dubbed SurgPose, providing instance-aware semantic keypoints and skeletons for visual surgical tool pose estimation and tracking. By marking keypoints using ultraviolet (UV) reactive paint, which is invisible under white light and fluorescent under UV light, we execute the same trajectory under different lighting conditions to collect raw videos and keypoint annotations, respectively. The SurgPose dataset consists of approximately 120k surgical instrument instances (80k for training and 40k for validation) of 6 categories. Each instrument instance is labeled with 7 semantic keypoints. Since the videos are collected in stereo pairs, the 2D pose can be lifted to 3D based on stereo-matching depth. In addition to releasing the dataset, we test a few baseline approaches to surgical instrument tracking to demonstrate the utility of SurgPose. The code and dataset will be publicly available: https://surgpose.github.io/.
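The abstract notes that 2D keypoints can be lifted to 3D using stereo-matching depth. As a rough illustration of that idea (not the authors' code, and not an API of the SurgPose release), the sketch below back-projects keypoints from a rectified left image with a pinhole camera model, using depth Z = fx * B / d for disparity d and baseline B. The function name `lift_keypoints_to_3d`, its parameters, and all numeric values are assumptions made up for this example; real intrinsics and baseline would come from the dataset's calibration files.

```python
import numpy as np


def lift_keypoints_to_3d(kps_left, disparity, fx, fy, cx, cy, baseline):
    """Back-project 2D keypoints from a rectified left image to 3D.

    kps_left:  (N, 2) pixel coordinates (u, v) in the left image.
    disparity: (N,) disparity in pixels at each keypoint (u_left - u_right).
    fx, fy, cx, cy: pinhole intrinsics of the rectified left camera (pixels).
    baseline:  distance between the two camera centers (same unit as output).

    Returns an (N, 3) array of (X, Y, Z) in the left camera frame.
    Keypoints with non-positive disparity are returned as NaN.
    """
    kps_left = np.asarray(kps_left, dtype=float)
    disparity = np.asarray(disparity, dtype=float)
    u, v = kps_left[:, 0], kps_left[:, 1]

    # Depth from disparity: Z = fx * B / d, only defined where d > 0.
    z = np.full(disparity.shape, np.nan)
    valid = disparity > 0
    z[valid] = fx * baseline / disparity[valid]

    # Pinhole back-projection of the pixel ray to that depth.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)


# Hypothetical values for illustration only (not SurgPose calibration).
points = lift_keypoints_to_3d(
    kps_left=[[640.0, 360.0], [740.0, 360.0]],
    disparity=[10.0, 10.0],
    fx=1000.0, fy=1000.0, cx=640.0, cy=360.0,
    baseline=0.005,  # meters
)
print(points)
```

Returning NaN for invalid disparities keeps the output aligned with the keypoint order, so a caller can mask out keypoints where stereo matching failed instead of silently dropping them.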
